Deepfakes are also used in education and media to create realistic videos and interactive content, which offer new ways to engage audiences. However, they also bring risks, especially for spreading false information, which has led to calls for responsible use and clear rules. For reliable deepfake detection, rely on tools and guidance from trusted sources such as universities and established news outlets. In light of these concerns, lawmakers and advocates have called for accountability around deepfake pornography.


In February 2025, according to web analytics platform Semrush, MrDeepFakes had more than 18 million visits. Kim has not seen the videos of her on MrDeepFakes, because “it’s scary to think about.” “Scarlett Johansson gets strangled to death by creepy stalker” is the title of one video; another, called “Rape me Merry Christmas,” features Taylor Swift.

Creating a deepfake for ITV

The videos were made by nearly 4,000 creators, who profited from the unethical, and now illegal, sales. By the time a takedown request is filed, the content may already have been saved, reposted or embedded across dozens of sites, some hosted overseas or buried in decentralized networks. The current bill offers a system that treats the symptoms while leaving the harms to spread. It is becoming increasingly difficult to distinguish fakes from real footage as this technology advances, particularly as it is simultaneously becoming cheaper and more accessible to the public. While the technology may have legitimate applications in media production, malicious use, such as the creation of deepfake pornography, is alarming.

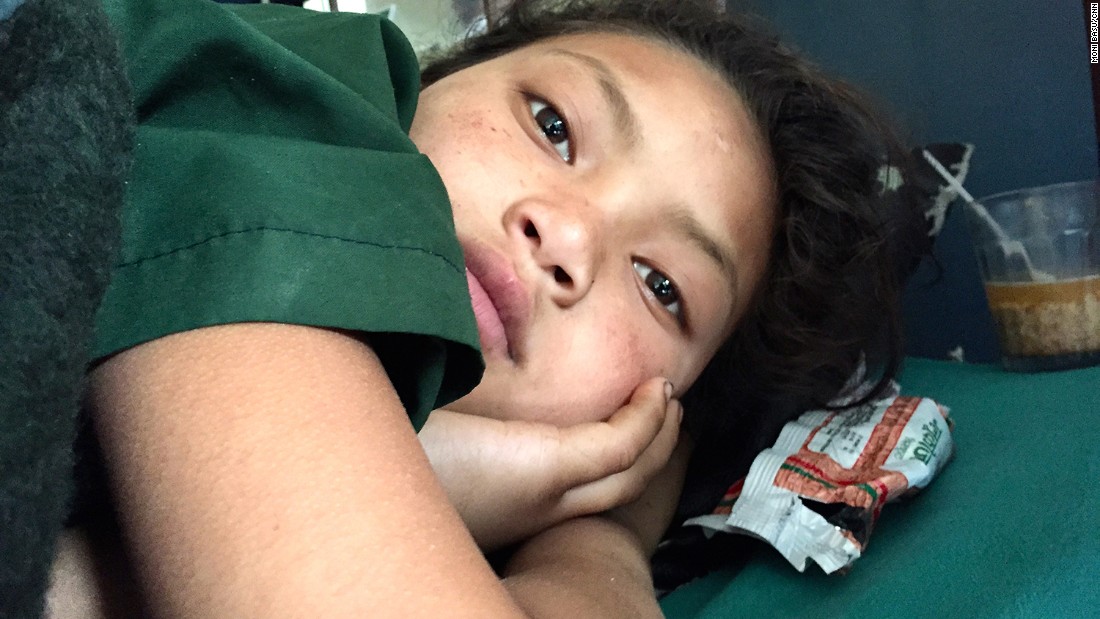

Major technology platforms such as Google are already taking steps to address deepfake pornography and other forms of NCIID. Google has created a policy for “involuntary synthetic pornographic imagery” enabling individuals to ask the tech giant to block online results displaying them in compromising situations. Deepfake pornography has been wielded against women as a weapon of blackmail, an attempt to destroy their careers, and as a form of sexual assault. More than 30 girls between the ages of 12 and 14 in a Spanish town were recently subject to deepfake porn images of them spreading through social media. Governments around the world are scrambling to tackle the scourge of deepfake pornography, which continues to flood the internet as the technology advances.

- At least 244,625 videos have been uploaded to the top 35 websites set up either exclusively or partially to host deepfake porn videos in the past seven years, according to the researcher, who requested anonymity to avoid being targeted online.

- They show this user was troubleshooting platform issues, recruiting designers, writers, developers and search engine optimization specialists, and soliciting offshore services.

- Her fans rallied to force X, formerly Twitter, and other sites to take them down, but not before they had been viewed millions of times.

- Therefore, the focus of this investigation was the oldest account in the forums, with a user ID of “1” in the source code, which was also the only profile found to hold the joint titles of staff and administrator.

- It emerged in South Korea in August 2024 that many teachers and female students were victims of deepfake images created by users who utilized AI technology.

Exploring deepfakes: Ethics, experts, and ITV’s Georgia Harrison: Porn, Power, Profit

This includes action by the companies that host sites and also by search engines, including Google and Microsoft’s Bing. Currently, Digital Millennium Copyright Act (DMCA) complaints are the primary legal mechanism that women have to get videos removed from websites. Stable Diffusion or Midjourney can create a fake beer commercial, or even a pornographic video with the faces of real people who have never met. One of the largest websites dedicated to deepfake pornography announced that it has shut down after a critical service provider withdrew its support, effectively halting the site’s operations.


In this Q&A, doctoral candidate Sophie Maddocks addresses the growing problem of image-based sexual abuse. Afterward, Do’s Facebook page and the social media accounts of some family members were deleted. Do then travelled to Portugal with his family, according to reviews posted on Airbnb, only returning to Canada this week.

Using a VPN, the researcher tested Google searches in Canada, Germany, Japan, the US, Brazil, South Africa, and Australia. In all the tests, deepfake websites were prominently displayed in search results. Celebrities, streamers, and content creators are often targeted in the videos. Maddocks says the spread of deepfakes has become “endemic,” which is what many researchers first feared when the first deepfake videos rose to prominence in December 2017. The reality of living with the invisible threat of deepfake sexual abuse is now dawning on women and girls.

How to Get People to Share Trustworthy Information Online

In the House of Lords, Charlotte Owen described deepfake abuse as a “new frontier of violence against women” and called for creation to be criminalised. While UK laws criminalise sharing deepfake pornography without consent, they do not cover its creation. The possibility of creation alone implants fear and threat into women’s lives.

Dubbed the GANfather, Ian Goodfellow, a former Google, OpenAI, and Apple researcher now at DeepMind, paved the way for highly sophisticated deepfakes in image, video, and audio. Technologists have also highlighted the need for solutions such as digital watermarking to authenticate media and detect involuntary deepfakes. Critics have called on companies creating synthetic media tools to consider building ethical safeguards. While the technology is neutral, its nonconsensual use to create involuntary pornographic deepfakes is increasingly common.

With the combination of deepfake video and audio, it’s easy to be deceived by the illusion. Yet, beyond the controversy, there are proven positive applications of the technology, from entertainment to education and healthcare. Deepfakes trace back as early as the 1990s with experimentations in CGI and realistic human images, but they really came into their own with the creation of GANs (Generative Adversarial Networks) in the mid-2010s.

Taylor Swift was famously the target of a throng of deepfakes last year, as sexually explicit, AI-generated images of the singer-songwriter spread across social media sites, such as X. The site, founded in 2018, is described as the “most prominent and mainstream marketplace” for deepfake porn of celebrities and individuals with no public presence, CBS News reports. Deepfake porn refers to digitally altered images and videos in which a person’s face is pasted onto another’s body using artificial intelligence.

Forums on the site allowed users to buy and sell custom nonconsensual deepfake content, as well as discuss practices for making deepfakes. Videos posted to the tube site are described strictly as “celebrity content”, but forum posts included “nudified” images of private individuals. Forum members referred to victims as “bitches” and “sluts”, and some argued that the women’s behaviour invited the distribution of sexual content featuring them. Users who requested deepfakes of their “wife” or “partner” were directed to message creators privately and communicate on other platforms, such as Telegram. Adam Dodge, the founder of EndTAB (End Technology-Enabled Abuse), said MrDeepFakes was an “early adopter” of deepfake technology that targets women. He said it had evolved from a video sharing platform to a training ground and marketplace for creating and trading in AI-powered sexual abuse material of both celebrities and private individuals.